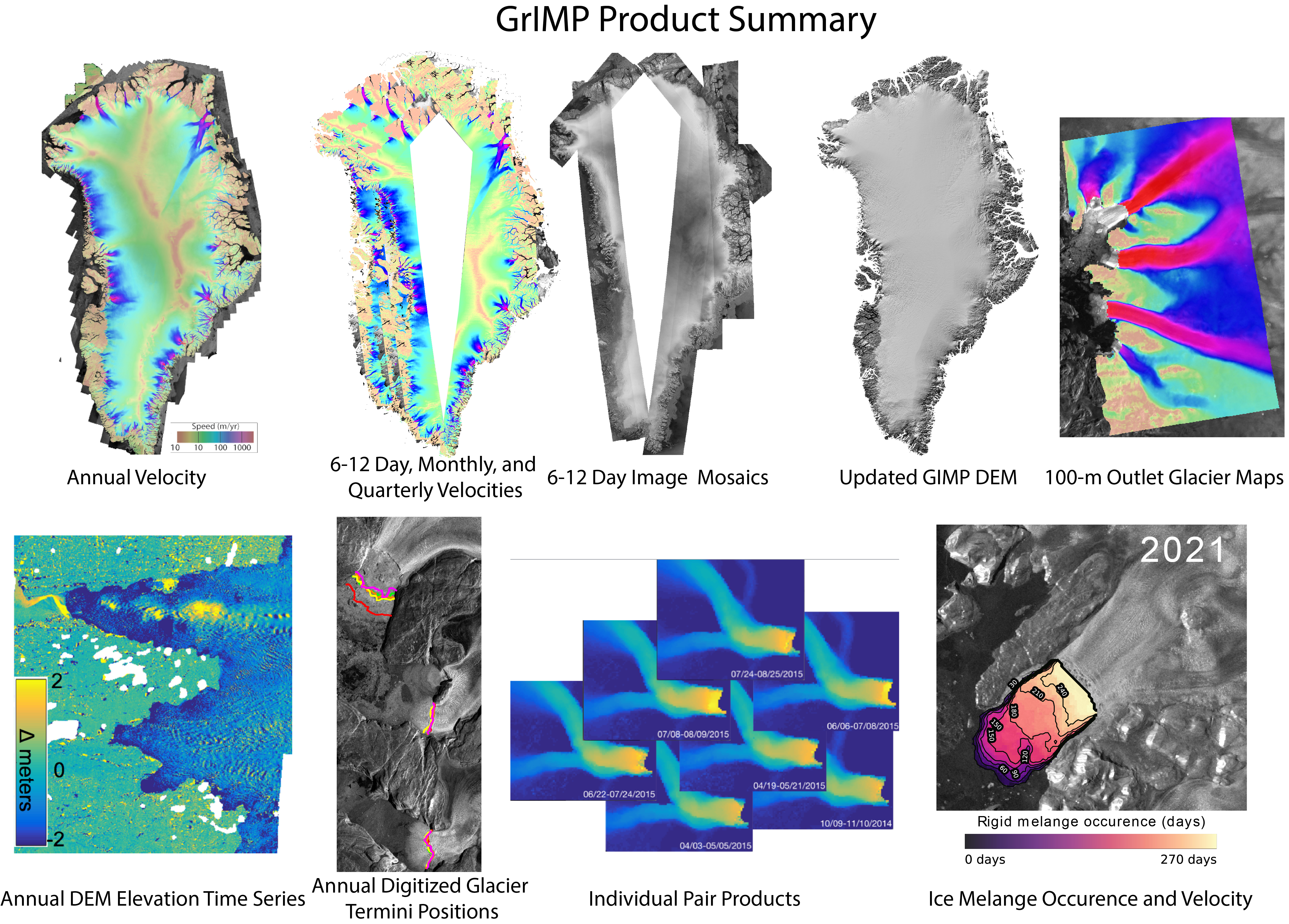

GrIMP Products¶

After more than 15 years, there is large volume (>2TB) of Greenland DEMs, velocity maps, and SAR mosaics at NSIDC.

GrIMP Overview and Tutorial Goals¶

This notebook illustrates some of the various tools and capabilities available to access and explore imagery and velocity data products from the Greenland Ice Mapping Project GrIMP.

Specifically, we will use the functionality of the nisarVelSeries classes for working with GrIMP velocity products.

This notebook will also include examples of how to quickly explore imagery and velocity mosaics, formatted as cloud optimized GeoTIFF (more on that below) to interactively select and download subsets of GrIMP imagery (NSIDC-0723) and velocity (NSIDC-481, 0725, 0727, 0731, 0766) data. For the Sentinel-based velocity mosaics (0725, 0727, 0731), a user can select a box on a map and choose which components are downloaded (vv, vx, vy, ex, ey, dT) and saved to a netCDF file.

All GrIMP notebooks and associated code can reached from the GrIMP overview repository

Tools that allow users to extract time series for their regions of interest in manageable size volumes are essential.

Key Features¶

Several features of these classes are useful for working with the GrIMP data as described below.

Cloud Optimized Geotiffs¶

Much of the functionality described here relies on the use of Cloud-optimized Geotiffs (COGs) for GriMP products, which have the following properties:

- The header with information about how the data are organized is all located at the beginning of the file. As a result, a result a single read provides all of the information about how the data are organized in the file. In some tiffs this information is distributed throughout the file, requiring many read operations to retrieve all of the metadata.

- The data are tiled (i.e., stored as a series of blocks like a checkerboard) rather than as a line-by-line raster. The tiling facilitates faster access to limited subsets. For example, to read a 50-by-50 km region from a Greeland mosaic, only a few tiles that cover that region need to be downloaded. By contrast, with conventional rastering, a 50km-by-1500km (~width of Greenland mosaics) would have to read (up to 30x more data) and then cropped to the desired width of 50km.

- A consistent set of overview images (pyramids) are stored with the data, allowing low-resolution images to be quickly extracted to create figures where the full resolution is not required (e.g., for inset figures).

Built on Xarray¶

Xarray is a powerful python tool that bundles metadata with data stored in NumPy arrays. While extremely powerful, it can be cumbersome for novice users. The classes described below are designed so that users can take advantage of the full functionality of Xarray, or bypass it entirely and access the data using either as numpy arrays or methods that perform tasks commonly applied to the data (interpolation, basic statistics, etc).

Dask¶

Dask is a program for applying parallel operations using Xarray, NumPy, and other libraries. Dask functionality is included implicitly in these libraries as well as the classes described below. It builds on the lazy-open capabilities in that it can operate on the metadata and cue up several complex operations before actual data download, providing the advantages of parallelism in a way that is transparent to the user.

Local and Remote Subsetting¶

After one of the classes described below performs a lazy open (e.g., a Greenland-wide map), often the next task is to apply a subsetting (cropping) operation to limit access to only the region of interest. All subsetting operations are non-destructive with respect to the full data set, so multiple subsetting operations can be applied in series.

After a subset is created, it initially retains its lazy-open status, which in many cases is a convenient way to work. For many operations, the data will automatically be downloaded as needed (e.g., for a plot). But if the data are used multiple times, it is better to explicitly download the data to avoid multiple downloads of the same data, which can greatly slow operations. In some cases, the system cache will retain the data and void repeat downloading, but large datasets can cause the cache to quickly be flushed and trigger redundant downloads. If there is sufficient memory, multiple downloads can be avoided by explicitly downloading the data to local memory as described below.

Subsets Can Be Saved For Later Use¶

Subsets can be written to netCDF files and re-read for later use.

Environment setup¶

Generally, GrIMP notebooks use a set of tools that have been tested with the environment.yml in the binder folder of the GrIMP repository. Thus, for best results when using GrIMP notebooks in the future and in local instances, create a new conda environment to run this and other other GrIMP notebooks from this repository. After downloading the environment.yml file, enter the following commands at the command line to prepare your environment. For today’s tutorial in CryoCloud, we do not need to perform these steps.

conda env create -f binder/environment.yml

conda activate greenlandMapping

python -m ipykernel install --user --name=greenlandMapping

jupyter lab

See NSIDCLoginNotebook for additional information.

The notebooks can be run on a temporary virtual instance (to start click binder). See the github README for further details.

Specialty GrIMP product imports¶

GrIMP imports¶

try:

import grimpfunc as grimp

except Exception:

%pip install -q git+https://github.com/fastice/grimpfunc.git@master

import grimpfunc as grimp

#

try:

import nisardev as nisar

except Exception:

%pip install -q git+https://github.com/fastice/nisardev.git@main

import nisardev as nisarCommon package imports¶

# Ignore warnings for tutorial

import warnings

warnings.filterwarnings("ignore")

import geopandas as gpd

import pandas as pd

import numpy as np

import os

import matplotlib.pyplot as plt

from IPython.display import Image

import dask

from dask.diagnostics import ProgressBar

ProgressBar().register()

dask.config.set(num_workers=2)

import panel

panel.extension()

from datetime import date, datetime

from shapely.geometry import box, Polygon

%load_ext autoreload

%autoreload 2Earth Data Login (Optional for This Tutorial)¶

These environment variabiles are needed to make the remote access work. The cookie file will be created with login procedure in the following step.

env = dict(GDAL_HTTP_COOKIEFILE=os.path.expanduser('~/.grimp_download_cookiejar.txt'),

GDAL_HTTP_COOKIEJAR=os.path.expanduser('~/.grimp_download_cookiejar.txt')) # Modify path as needed

os.environ.update(env)If this is a first login, you will be prompted for your earthdata user name and passwd. A netrc file will be created or updated so the information does not need to be retyped on subsequent login attempts.

myLogin = grimp.NASALogin()

myLogin.view()Getting login from ~/.netrc

Already logged in. Proceed.

from IPython.display import HTML

HTML('<iframe width="560" height="315" src="https://www.youtube.com/embed/0kIcSXkxaxI" \

frameborder="0" allow="accelerometer" allowfullscreen></iframe>')Overview of COGs and GrIMP product exploratory tool¶

GrIMP velocity products are stored at NSIDC in cloud-optimized GeoTIFF (COG) format with each component stored as a separate band (e.g., vx, vy, or vv). In this notebook, we focus on the velocity data, but the error and x and y displacement components can be similarly processed.

For reading the data, the products are specified with a single root file name (e.g., for filename.vx(vy).version.tif). For example, the version 3 annual mosaic from December 2017 to November 2018 is specified as GL_vel_mosaic_Annual_01Dec17_30Nov18_*_v03.0. For locally stored files, the corresponding path to the data must be provided. For remote data, the https link is required. In the following example, the initialSearch() function populates a gui search window, which allows the search parameters to be altered.

Note, you may need to run this cell block twice to initially see the list and total file number repopulate to your selections.

# mode='nisar' for full Greenland mosaics

# mode='subsetter' for all raster products

# mode='image' for just image products

# mode='none' for all products

myUrls = grimp.cmrUrls(mode='nisar')

display(myUrls.initialSearch())This cell will pop up a search tool for the GrIMP products, which will run a predefined search for the annual products. While in principle, other products (e.g., six-day to quarterly can be retrieved, the rest of the notebook will need some modifications to accomodate).

The results from the search can be returned with the following method call:

myUrls.getCogs(replace='vv', removeTiff=True)['https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2014.12.01/GL_vel_mosaic_Annual_01Dec14_30Nov15_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2015.12.01/GL_vel_mosaic_Annual_01Dec15_30Nov16_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2016.12.01/GL_vel_mosaic_Annual_01Dec16_30Nov17_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2017.12.01/GL_vel_mosaic_Annual_01Dec17_30Nov18_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2018.12.01/GL_vel_mosaic_Annual_01Dec18_30Nov19_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2019.12.01/GL_vel_mosaic_Annual_01Dec19_30Nov20_*_v04.0',

'https://n5eil01u.ecs.nsidc.org/DP4/MEASURES/NSIDC-0725.004/2020.12.01/GL_vel_mosaic_Annual_01Dec20_30Nov21_*_v04.0']The search returned values for the speed products, which are indicated by a ‘vv’ in the name. In the above call, the ‘vv’ is replaced with a wildcard, ‘*’, using the replace='vv' keyword. The subsequent programs will also automatically append the tif, so the removeTiff=True is used to remove the ‘.tif’ extension.

Preload Data and Select Bands¶

The cells in this section read the cloud-optimized geotiffs (COG) headers and create nisarVelSeries or nisarImageSeries objects for velocity or image data, respectively. The bands (e.g., vx, vy) can be selected at this stage.

More detail can found on working with these tools in the workingWithGrIMPVelocityData and workingWithGrIMPImageData notebooks.

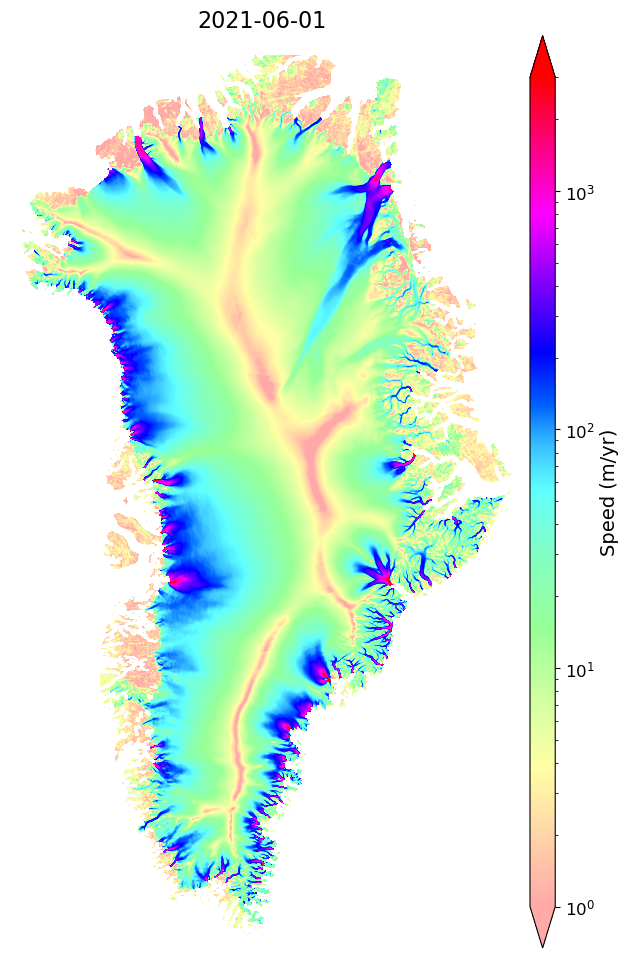

View example mosaic product from local file¶

For the purpose of this tutorial, we will read in a file saved locally to the shared directory to visualize an example of the annual velocity mosaic. For local file acccess, we set url=False, otherwise everything else is the same as for remote access. The data are stored at several resolutions, which can be specified by the overviewLevel keyword. Full resolution is overviewLevel=-1. To plot a full map of Greenland this resolution would slow things down, so we can pick a lower resolution with values of 0, 1, 2..., with each increment halving the resolution. The cell block below uses a function in the nisar package to initialize a nisarVelSeries instance:

myVelSeries = nisar.nisarVelSeries()

# Path to local file

localFile = '/home/jovyan/shared-public/GeoTIFF/GL_vel_mosaic_Annual_01Dec20_30Nov21_*_v04.0'

# This builds a series, which is specified by a list of files. So with 1 file, the brackets are used to pass a list rather than a single file name

myVelSeries.readSeriesFromTiff([localFile], useDT=False, useErrors=False, readSpeed=True, url=False, useStack=True, overviewLevel=1)

myVelSeries.xrThe actual data are not downloaded at this stage, but the xarray internal to each object will read the header data of each product so it can efficiently access the data during later downloads.

Plot velocity map¶

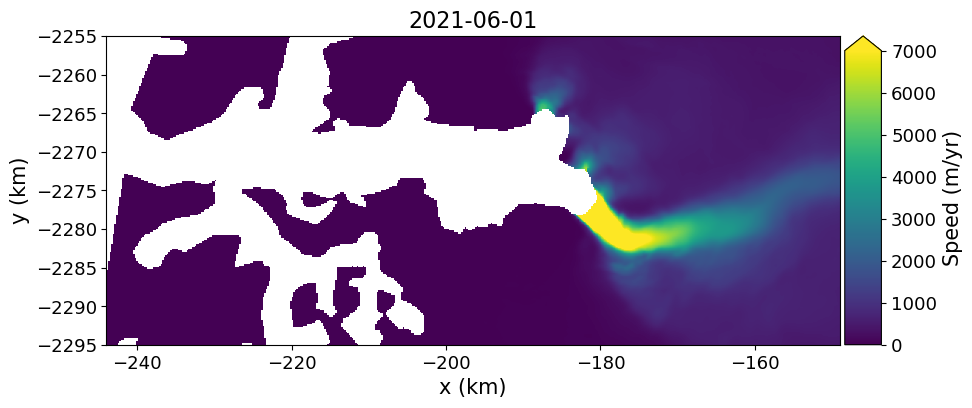

This will plot the velocity map for the annual mosaic nearest the user-provided date (in this example there is only one date, so the date could be omitted). Below, we will use mid 2021. Note, since this series has only one year, the date could have been omitted.

myVelSeries.displayVelForDate('2021-06-01',labelFontSize=14, plotFontSize=12, titleFontSize=16,

vmin=1, vmax=3000, scale='log',colorBarPad=0.15, units='km', axisOff=True)[########################################] | 100% Completed | 411.48 ms

[########################################] | 100% Completed | 101.48 ms

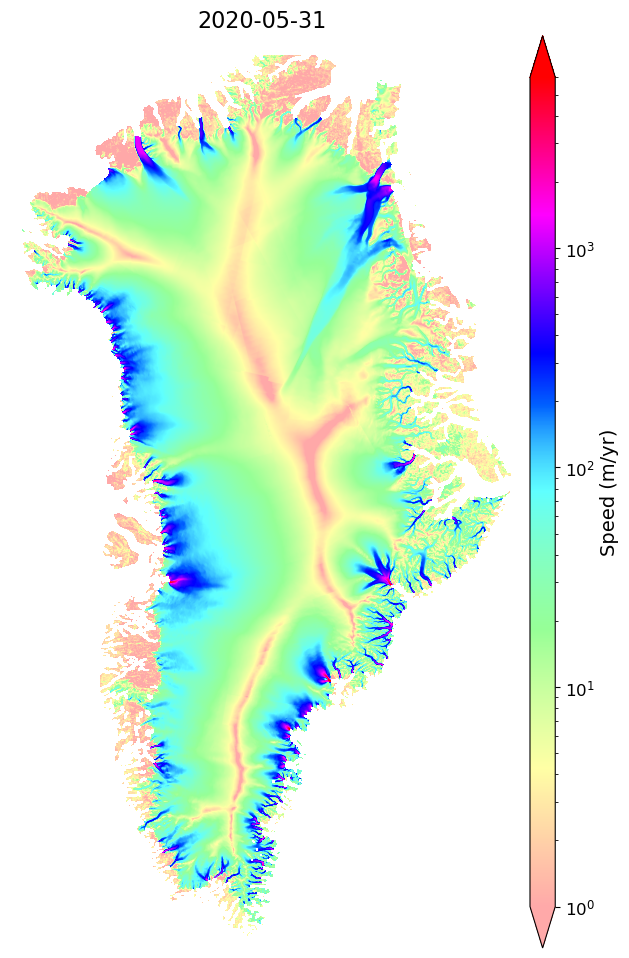

Remote Example for 2020 velocity map (optional)¶

myVelSeriesRemote = nisar.nisarVelSeries()

myVelSeriesRemote.readSeriesFromTiff(myUrls.getCogs(replace='vv', removeTiff=True), url=True, readSpeed=False, useErrors=False, useDT=False, overviewLevel=1)

myVelSeriesRemote.displayVelForDate('2020-06-01',labelFontSize=14, plotFontSize=12, titleFontSize=16,

vmin=1, vmax=6000,scale='log',colorBarPad=0.15,units='km', axisOff=True)[########################################] | 100% Completed | 3.46 sms

[########################################] | 100% Completed | 202.99 ms

myVelSeriesRemote.subset📐 Spatial subsetting routines¶

🚨These files can be large!

Below are functions that allow you to quickly subset the data by spatial bounds, including the functions you can use to interactively select an ROI when exploring datasets remotely

Method 1: Interactive ROI Selection¶

Run the next the tool below to select the bounding box (or modify a manually selected box), which will display a SAR image map. Depending on network speed, it could take a few seconds to a minute to load the basemap. Use the box tool in the plot menu to select a region of interest.

Method 2: Manual Selection¶

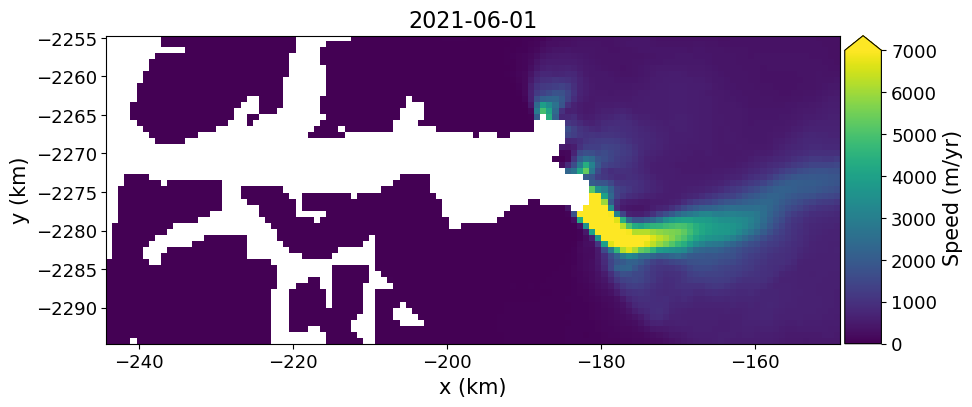

The coordinates for a bounding box can be manually entered by modifying the cell below with the desired values. Even if not using interactive selection, running that step displays the manually selected box coordinates on a radar map of Greenland. Note by default, coordinates are rounded to the nearest kilometer. We will use numpy to define a specific x and y bounds of our ROI for the tutorial today.

values = np.around([-243889, -2295095, -149487, -2255127], decimals=-3)

xyBounds = dict(zip(['minx', 'miny', 'maxx', 'maxy'], values))

xyBounds{'minx': -244000, 'miny': -2295000, 'maxx': -149000, 'maxy': -2255000}With this bounding box, we can subset the data to pull out the area of interest.

myVelSeries.subSetVel(xyBounds)

myVelSeries.subsetThe data in the above example map are stored as an Xarray, velMap.subset, here representing data clipped spatially by xyBounds. The full time series of Greenland-wide mosiacs can reach sizes of > 8GB and may take awhile to download! Because of the lazy open mentioned above, the data have not been downloaded or read from the disk yet. Before applying the final subset, its useful to examine the size of the full data (virtual) array. If the loadDataArray step was sucessful, running the cell above will provide details on the size and organization of the full xarray (prior to any download).

We can now view this image with displayVelForDate method.

fig, ax = plt.subplots(1, 1, figsize=(10, 6))

myVelSeries.displayVelForDate('2021-06-01', units='km', ax=ax) # Note the x, y units can either be 'm' or 'km'[########################################] | 100% Completed | 101.49 ms

[########################################] | 100% Completed | 101.36 ms

[########################################] | 100% Completed | 101.56 ms

[########################################] | 100% Completed | 101.21 ms

[########################################] | 100% Completed | 101.22 ms

Remote Access and Downloading to Local Memory¶

myVelSeriesFullRes = nisar.nisarVelSeries()

myVelSeriesFullRes.readSeriesFromTiff(myUrls.getCogs(replace='vv', removeTiff=True), url=True, readSpeed=False, useErrors=False, useDT=False, overviewLevel=-1) # This is now -1 to download full res data

#

myVelSeriesFullRes.xrmyVelSeriesFullRes.subSetVel(xyBounds)

myVelSeriesFullRes.subsetNow Download the data to local memory.

myVelSeriesFullRes.loadRemote()

myVelSeriesFullRes.subsetfig, ax = plt.subplots(1, 1, figsize=(10, 6))

myVelSeriesFullRes.displayVelForDate('2021-06-01', units='km', ax=ax)

Saving to NetCDF¶

Displaying and Plotting Data¶

The remainder of the tutorial focuses on creating publication ready plots.

Use predownloaded subset for plotting¶

Now, let’s initialize another nisarVelSeries instance, and reload the previously downloaded data file (saved as netcdf) to that instance. ⬇

myVelReload = nisar.nisarVelSeries()

myVelReload.readSeriesFromNetCDF('./res/steenstrupGlacier_annVel.nc')

myVelReload.loadRemote()

myVelReload.xr #confirm dimensions of read-in subsetted velocitiesThis time the loadRemote function loaded the data into local memory. The formatting for xarray display is no a little cruder than the remote dask version.

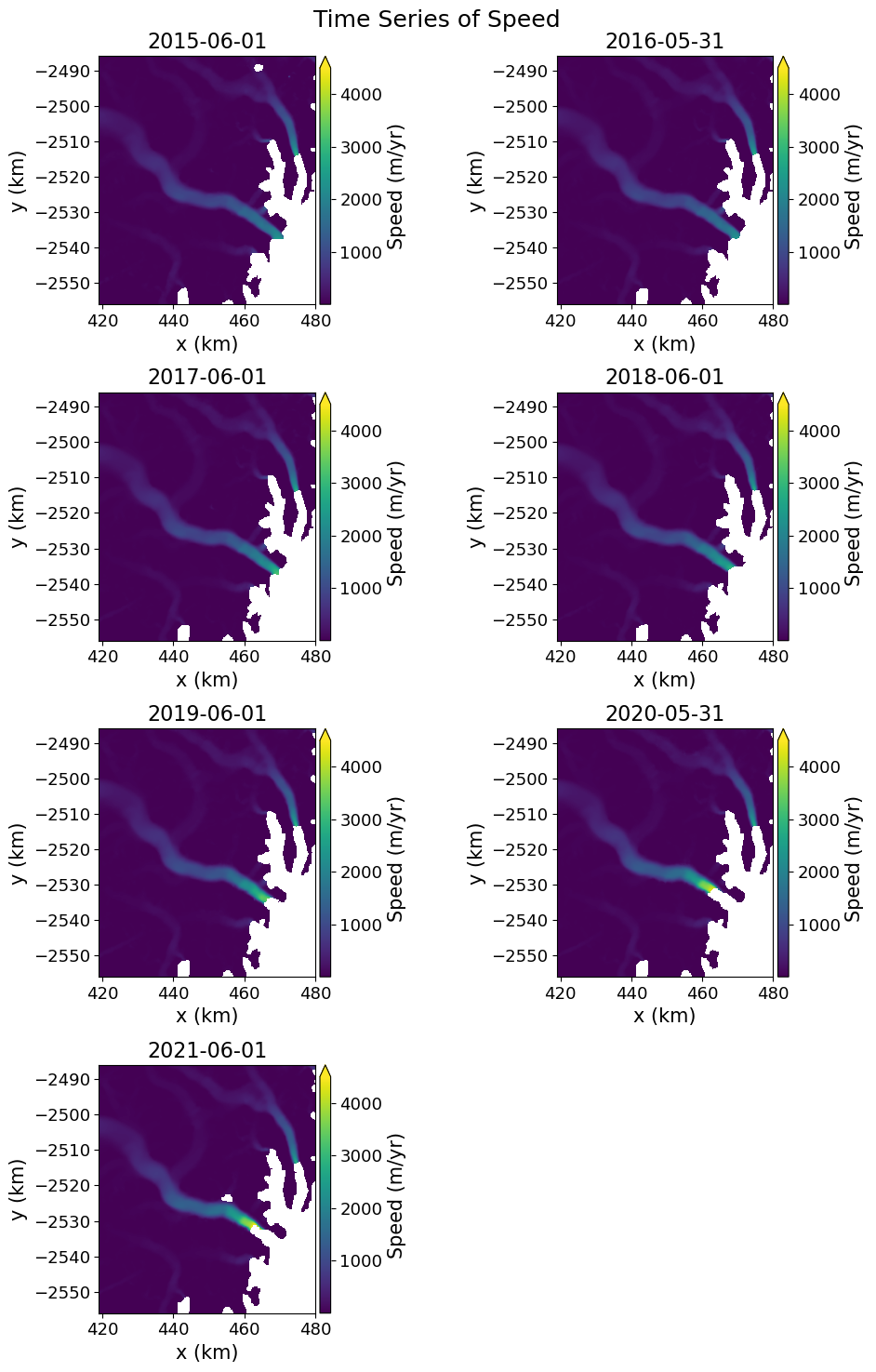

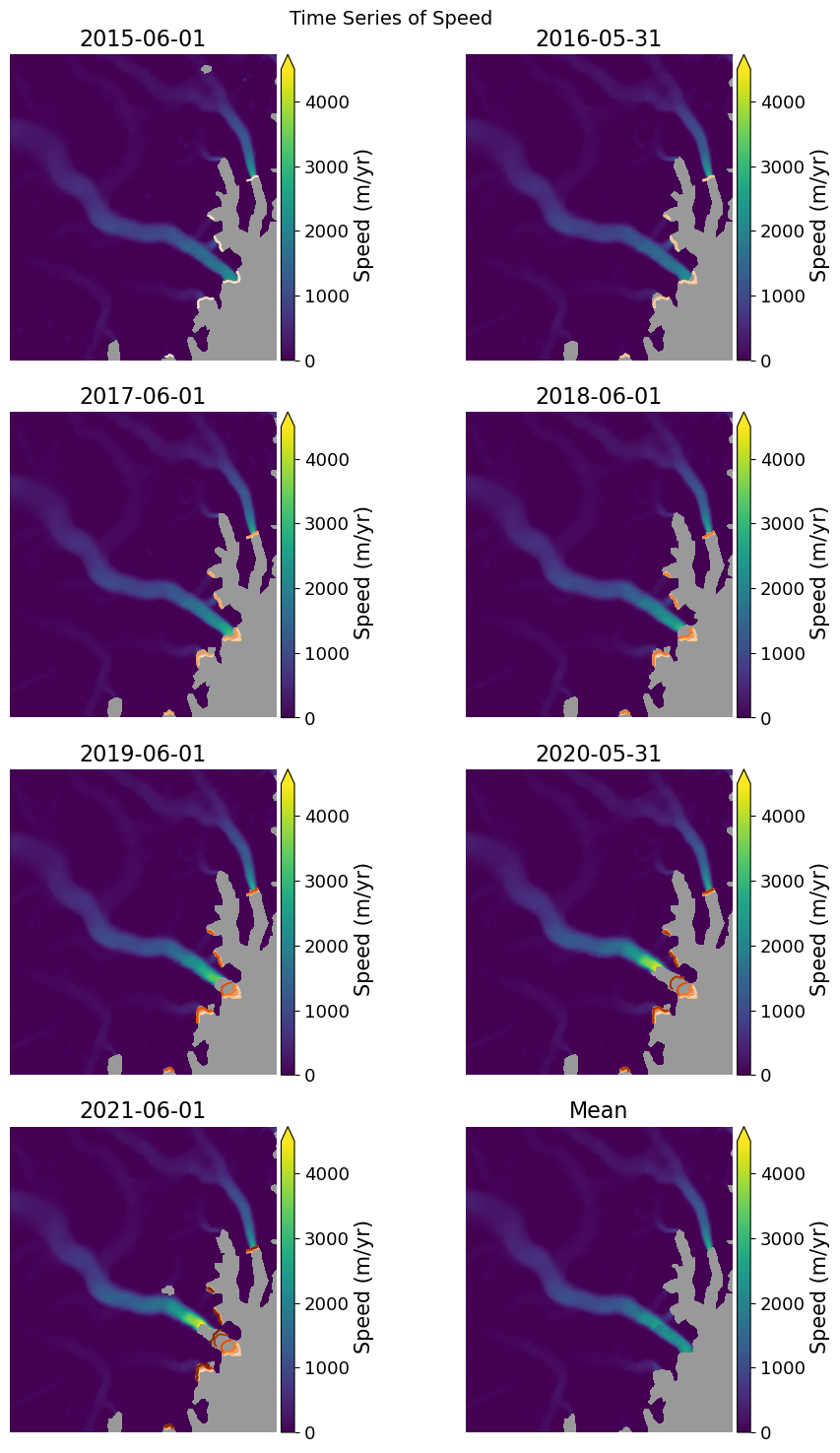

🖍 Plot the annual velocity maps for Steenstrup Glacier (the glacier within our example spatial subset).¶

This example loops over the time variable and plots the data for each year.

fig, axes = plt.subplots(4, 2, figsize=(10,15))

for ax, date in zip(axes.flatten(), myVelReload.time):

myVelReload.displayVelForDate(date=date, ax=ax, autoScale=False,vmin=1, vmax=4500, units='km', axisOff=False, scale='linear')

axes[-1, -1].axis('off'); #remove any empty axes if odd number of years

fig.suptitle('Time Series of Speed', fontsize=18)

fig.tight_layout()

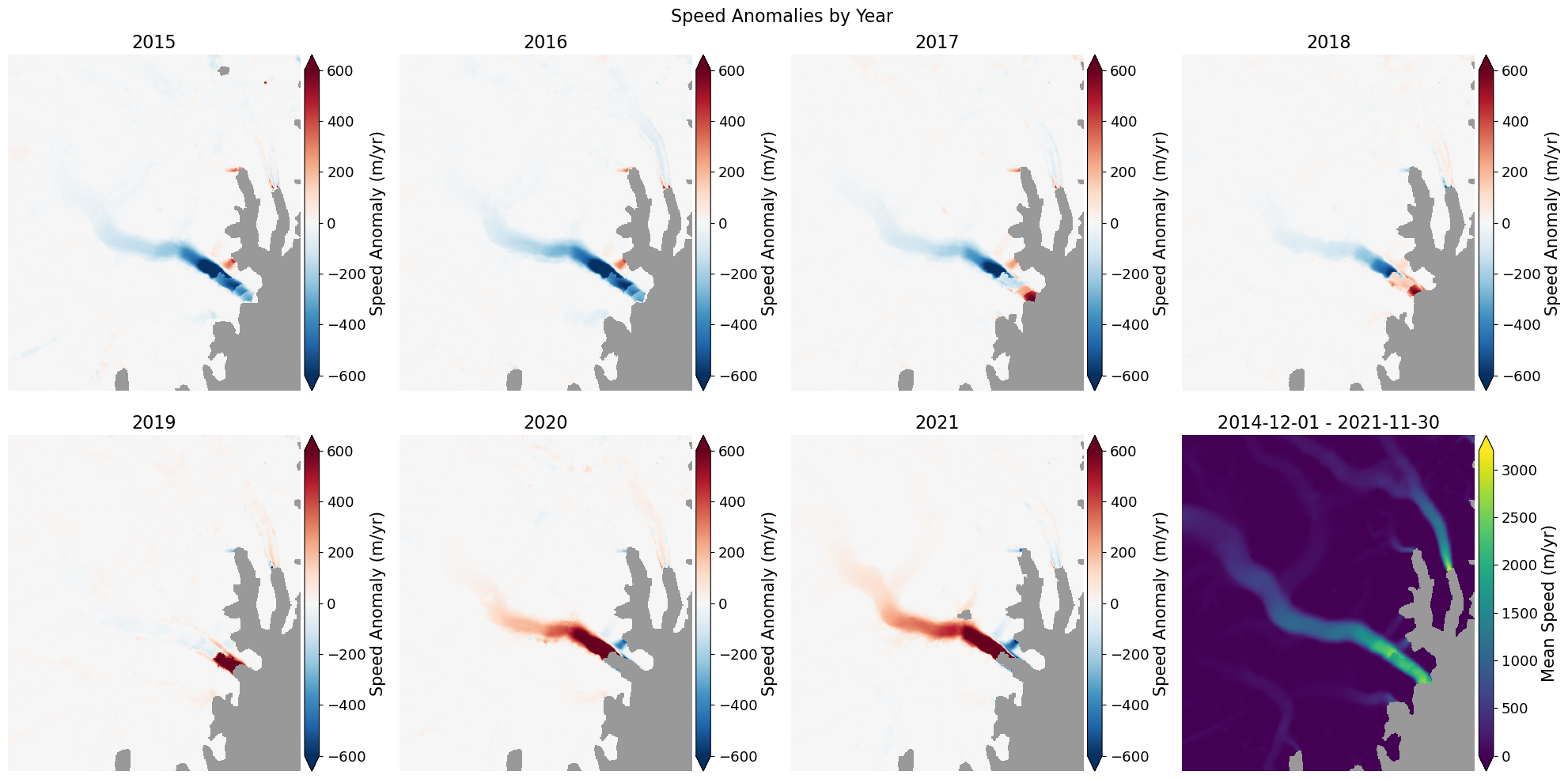

📊 Quickly plot velocity anomalies¶

There are functions to compute statistics such as mean and standard of the stack as well as the anomalies, which are the difference between the speed at each step and the mean for full series. The mean and anomalies are calculated as:

velAnomaly = myVelReload.anomaly() # These results are saved velSeries instances

velMean = myVelReload.mean()These results can be plotted with these steps:

# Plot the anomaly for each year

fig, axes = plt.subplots(2, 4, figsize=(20,10))

# Loop over each anomaly

for date, ax in zip(velAnomaly.time, axes.flatten()):

velAnomaly.displayVelForDate(date, ax=ax, units='m', vmin=-600, vmax=600, autoScale=False, axisOff=True,

title=date.strftime("%Y"), cmap='RdBu_r', colorBarLabel='Speed Anomaly (m/yr)',

extend='both', backgroundColor=(0.6, 0.6, 0.6))

# Show the mean in the last panel

velMean.displayVelForDate(date, ax=axes[1,3], units='m', autoScale=True, axisOff=True,

midDate=False, colorBarLabel='Mean Speed (m/yr)',

extend='both', backgroundColor=(0.6, 0.6, 0.6))

#

fig.suptitle('Speed Anomalies by Year', fontsize=16)

fig.tight_layout()

📈 Explore multiyear trends with Quick tool¶

Use the interactive inspection tool in the cell block below to quickly visualize multiyear trends ⬇

myVelReload.inspect()📍 Combine GrIMP raster and vector data¶

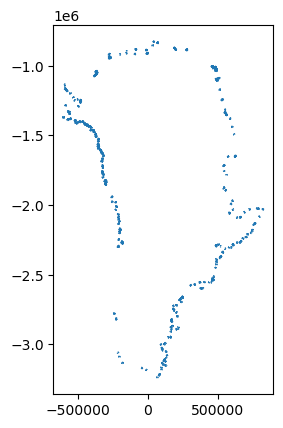

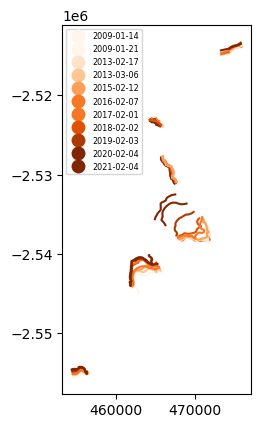

Let’s read in digitized annual glacier terminus traces within this ROI. The compiled.shp file is an aggregate of 2009-2021 annual terminus data from GrIMP product NSIDC-0642. Run the cell block below to quickly view the Greenland-wide dataset.

fronts = gpd.read_file("./shpfiles/compiled.shp")

fronts = fronts.to_crs("EPSG:3413") # Convert to same crs as velocity data

fronts.plot()<Axes: >

Now we will use the bounds from the velSeries to create a box to filter out only the ice fronts in our region of interest.

# define a polygone based on the xy extents of our reloaded velocity data

poly = box(*myVelReload.bounds())

clippedfronts = gpd.clip(fronts, poly)

Or = plt.colormaps['Oranges'].resampled(8)

clippedfronts.plot(column = "SourceDate",cmap=Or,legend=True,legend_kwds={'loc':'upper left','fontsize':'xx-small'})<Axes: >

Now we will plot the ice fronts over the velocity maps.

fig, axes = plt.subplots(4, 2, figsize=(10,15))

#define first year of time series

Yr1 = myVelReload.time[0].year

for ax, date in zip(axes.flatten(), myVelReload.time):

# vmax will limit the data to that value (the default is 7000 m/yr). But if autoscale is enabled

# it will limit the output to the lesser of the maximum data value or vmax.

# To force a specific range for all plots, specify vmin, vmax, and set autoscale to False.

myVelReload.displayVelForDate(date=date, ax=ax, axisOff=True, autoScale=False,

vmin=0, vmax=4500, backgroundColor=(0.6,0.6,0.6))

yr = date.year

clipYr = clippedfronts.loc[pd.to_datetime(clippedfronts["SourceDate"]).dt.year <= yr]

#sort shapefile features by date

SortedTerm = clipYr.sort_values(by='SourceDate', ascending=True)

#plot front positions up through subplot year through time

for i in range(Yr1, yr+1):

SortedTerm.loc[pd.to_datetime(SortedTerm["SourceDate"]).dt.year == i].plot(

ax=ax, column='SourceDate', color=Or(i-(Yr1-1)), zorder=i-(Yr1-2))

velMean.displayVelForDate(date=date, ax=axes[-1, -1], axisOff=True, autoScale=False, vmin=0, vmax=4500,

backgroundColor=(0.6,0.6,0.6), title='Mean')

fig.suptitle('Time Series of Speed', fontsize=14)

fig.tight_layout()

plt.show()

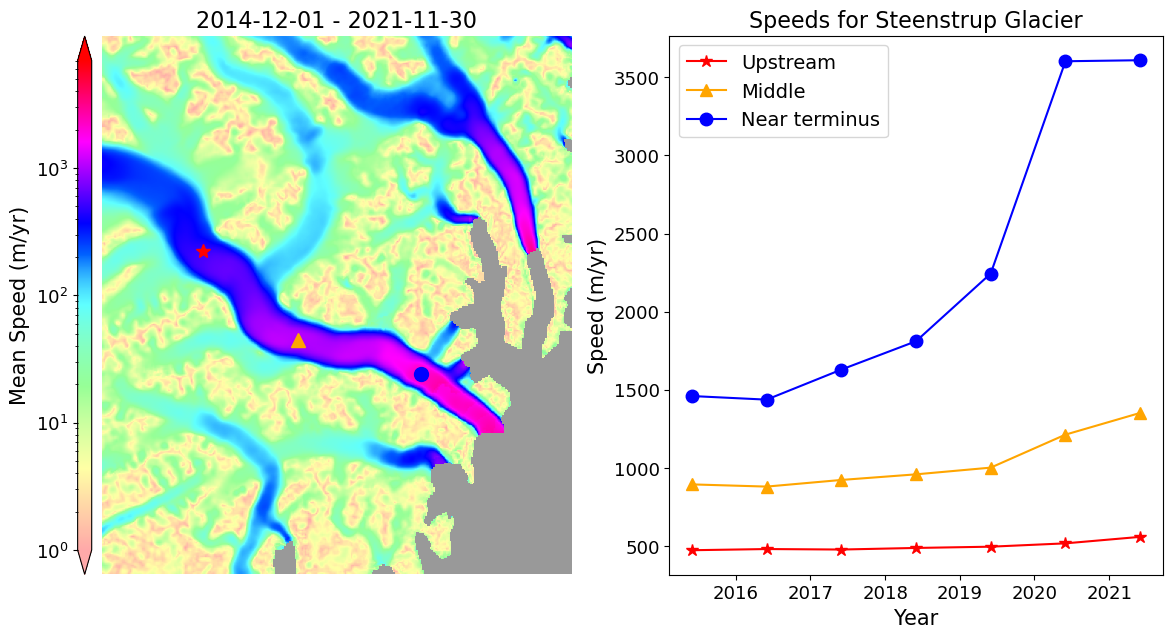

Point Plots¶

The velSeries instances can be used to plot points at specified locations. First we define the points and the corresponding colors, symbols, and labels.

xpts, ypts = [432.2, 444.5, 460.5], [-2514, -2525.5, -2530]

colors = ['r', 'orange', 'b']

symbols = ['*', '^', 'o']

labels = ['Upstream', 'Middle', 'Near terminus']Now plot time series at each point.

fig, axes = plt.subplots(1, 2, figsize=(14,7))

mapAxes, plotAxes = axes

# Map plot

velMean.displayVelForDate(ax=mapAxes, units='km', autoScale=True, axisOff=True, scale='log',

midDate=False, colorBarLabel='Mean Speed (m/yr)',

extend='both', backgroundColor=(0.6, 0.6, 0.6), colorBarPosition='left', vmin=1, colorBarSize='3%', colorBarPad=.1)

# Loop to plot points

for x, y, symbol, color, label in zip(xpts, ypts, symbols, colors, labels):

# plot points on map

mapAxes.plot(x, y, symbol, color=color, markersize=10)

# plot values

myVelReload.plotPoint(x, y, ax=plotAxes, band='vv', marker=symbol, color=color, linestyle='-',

units='km', label=label, markersize=9)

# Finish plots

myVelReload.labelPointPlot(xLabel='Year', yLabel='Speed (m/yr)', ax=plotAxes, title='Speeds for Steenstrup Glacier')

plotAxes.legend(fontsize=14)

Similar plots for profiles can be produced with myVelSeries.plotProfile(). See Flowlines and workingWithGrIMPVelocity on the GrimpNotebooks repository.